Bert Auburn Scholarship

BERT is a successor to the Transformer that inherits its stacked bidirectional encoders, and most of its architectural principles are the same as in the original. The name stands for Bidirectional Encoder Representations from Transformers, that is, bidirectional encoder representations built from multiple Transformers, and the model was developed by Google. BERT is the most famous encoder-only model and excels at tasks that require some level of language comprehension. Despite being one of the earliest LLMs, it remains relevant today and continues to find applications in both research and industry.

Before discussing those tasks, let us first recall how BERT processes information. As input, it takes a [CLS] token and two sentences separated by a separator. I will also demonstrate how to configure BERT for any task you want beyond the ones stated above and the ones Hugging Face provides.
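To make the input format concrete, here is a minimal sketch of how a sentence pair could be laid out for BERT. It rests on a few assumptions: the separator name `[SEP]` and the 0/1 segment ids follow the standard BERT convention, which the text above does not spell out; the whitespace tokenizer stands in for BERT's real WordPiece tokenizer; and `build_pair_input` is a hypothetical helper name, not a library function.

```python
from typing import List, Tuple


def build_pair_input(sentence_a: str, sentence_b: str) -> Tuple[List[str], List[int]]:
    """Lay out a sentence pair as [CLS] A [SEP] B [SEP] with segment ids.

    Hypothetical helper for illustration only; not part of any library.
    """
    # Toy whitespace tokenizer (assumption): real BERT uses WordPiece subwords.
    tokens_a = sentence_a.lower().split()
    tokens_b = sentence_b.lower().split()

    # [CLS] opens the sequence; [SEP] closes each sentence.
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]

    # Segment ids: 0 for [CLS], sentence A and its [SEP]; 1 for sentence B and its [SEP].
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


tokens, segment_ids = build_pair_input("the cat sat", "it was very tired")
for token, segment in zip(tokens, segment_ids):
    print(f"{token:8s} segment={segment}")
```

In practice a library tokenizer would produce this layout (plus integer ids and an attention mask) for you; the sketch only shows where the special tokens sit relative to the two sentences.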
Texas fourth-year kicker Bert Auburn awarded a scholarship Football

Texas kicker Bert Auburn to remain with Longhorns for fifth year

WATCH Texas K Bert Auburn awarded a scholarship Burnt Orange Nation

Texas football walk-on kicker Bert Auburn earns scholarship

Steve Sarkisian Surprises Texas Kicker With Scholarship VIDEO OutKick

Texas Longhorns kicker Bert Auburn awarded with scholarship

Texas Longhorns Football Roster Scholarship allotment at every

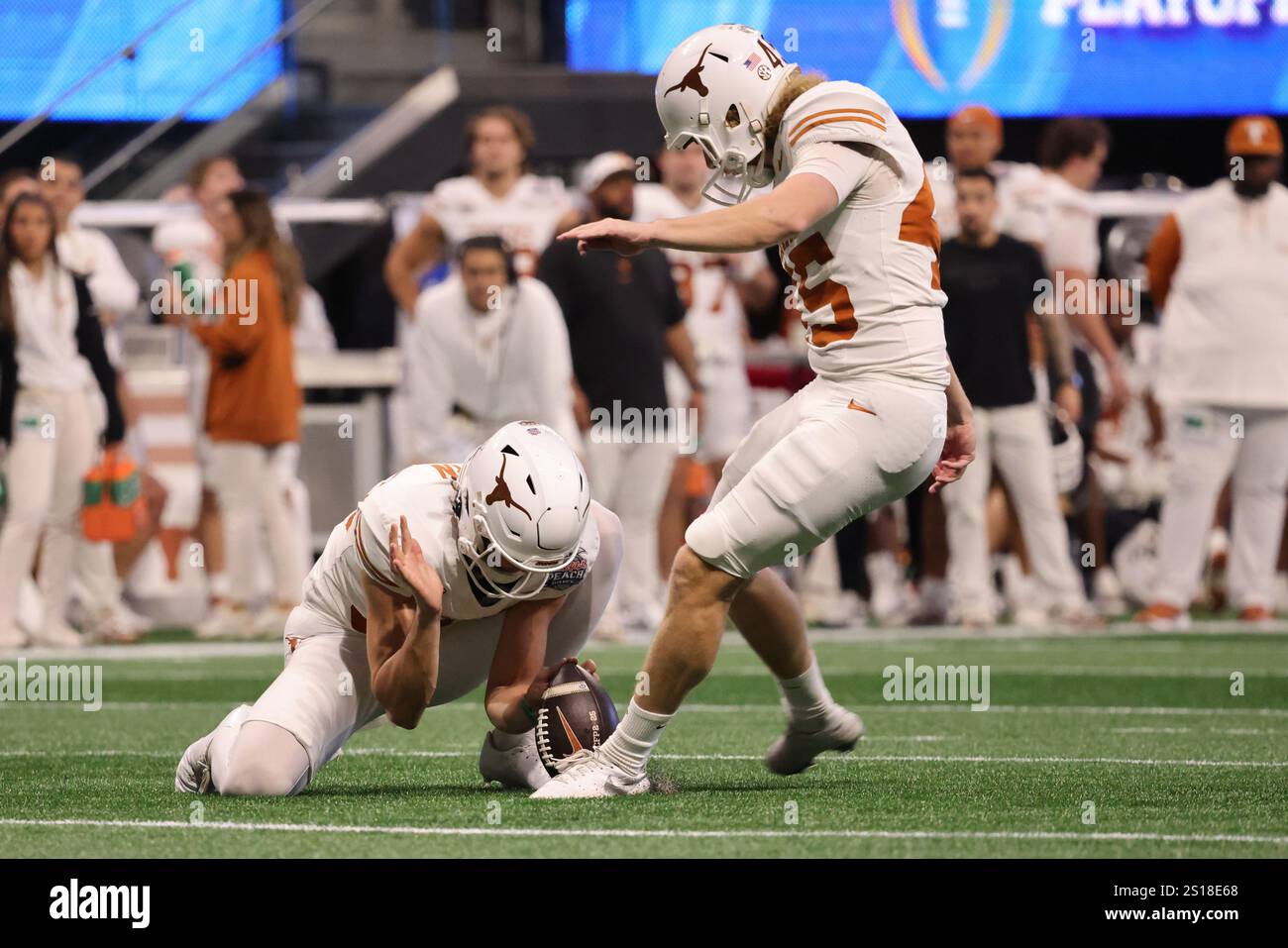

Atlanta, USA. 1st Jan, 2025. Texas senior kicker BERT AUBURN

WATCH Texas football team erupts in celebrations as HC Steve Sarkisian

Texas Football kicker Bert Auburn surprised with a scholarship

